Achieving ultra-reliable networks in 2022: the challenge of delivering 5G and low latency

Capacity Europe 2021

This article was written to support a panel discussion at Capacity Europe 2021 on 21 October in London. The panel is titled ‘How Digitalisation is Driving the Interconnectivity Landscape’, and it is being moderated by Charles Orsel des Sagets, Managing Partner at Cambridge Management Consulting for Europe and LATAM. Contributions to the article were made by Ivo Ivanov, CEO of DE-CIX International; Tim Passingham, Chairman at Cambridge Management Consulting; and Eric Green, Senior Partner at Cambridge Management Consulting. Thank you to everyone involved.

How the pandemic has shaped the roadmap for internet connectivity in Europe

The pandemic has brought into sharp focus the need for reliable and flexible networks at home. The switch to remote working, and the speed with which meetings from our desks at home became normal, brought with it surges in internet traffic and demand for reliable, stable connections.

Networks across Europe coped well, for the most part, but questions remain about how carriers will adapt and scale in the near term for both a growing remote workforce and the predicted rise of new technologies.

5G is part of the answer (but only a part) and its development and rollout will coincide with and drive innovations in IoT, autonomous vehicles, and AI. These emerging technologies also exist within larger tectonic shifts in society and culture, including increasing digitalisation, virtualisation and autonomy in services; and the beginnings of the decentralised application of blockchain technology.

As our society embraces digitalisation, and the process is accelerated by Covid-19, we ask, what are the major challenges faced by carriers heading into 2022 to deliver on twin fronts of both infrastructure demand and customer expectation?

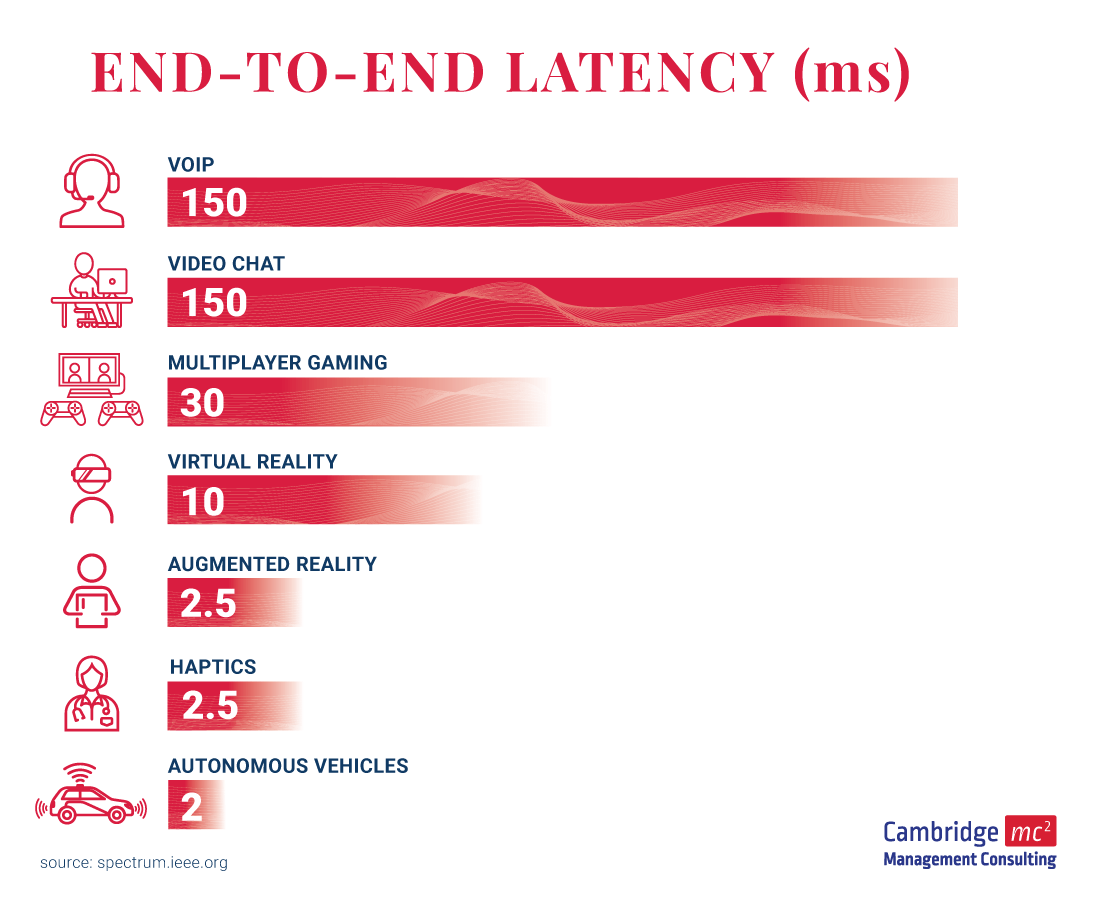

We will discuss the issue of low-latency, particularly in meeting customer expectations. These expectations anticipate the demands of video-centric content, remote working, IoT and gaming. We will also explore metrics for customer experience, and conclude with the impact of 5G technology.

Measuring Quality of Experience for internet connectivity

The surge in internet traffic during lockdowns may be the start of a sustained rise in demand, driven in part by online gaming and the growing markets for game streaming and VR. This puts a spotlight on latency as a key indicator of customer expectation.

Let us first talk more broadly about indicators of network quality in 2021 and beyond.

Digital Equality - The widening speed gap in Europe

Europe’s internet speeds have increased by more than 50% in the last 18 months. However, this comes at the cost of widening gaps between urban and rural areas and also between Northern European countries and South-Eastern Europe.

The UK too lags behind many of its Western European neighbours when it comes to average internet speed. It was placed 47th in a study conducted in 2020. In fact, the average broadband speed in the UK was less than half the Western European average.

The EU has a stated goal to be the most connected continent by 2030. It has already taken action towards this by ending roaming charges and introducing a price cap on intra-EU communications. The key goal is for every European household to have access to high-speed internet coverage by 2025 and gigabit connectivity by 2030.

The elevation of internet access as a necessary human right is of course encouraging, and so are the targets set by the EU. However, for these targets to be truly meaningful, there needs to be progress on a number of challenges to connectivity across Europe. Redefining the metrics we use to track this progress is also vital.

Measuring Quality of Experience (QoE)

There are a variety of problems with measuring internet speeds in a comparable way. Usually, ISPs present averages across a range of time in Mbps or sometimes the % of plan speed achieved across a range of time.

As bandwidth in many countries in Europe moves towards, and over, 100Mbps, this proxy is becoming a weaker indicator of user experience.

There are also a number of key reasons why figures published by an ISP might be misleading compared to actual user experience.

Some of these problems are as follows:

- Lab-testing of internet speed does not replicate the real-world chain of devices/hosts involved in sending and receiving packets

- Averages in Mbps ignore speeds at peak periods, when the network is congested and providers throttle bandwidth

- The ‘plan speed’ does not reflect actual speeds experienced in a household, where packet queuing, WiFi congestion and network conditions affect users differently on the customer LAN

- This metric ignores latency, which is becoming a better signal of internet experience in an age of video streaming and online gaming (more on this below)
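To illustrate the point about averages, here is a minimal sketch comparing the mean of a set of speed tests with a low percentile of the same data. The sample values are hypothetical (a 100Mbps plan with four congested evening hours) and the function name is ours, not an industry standard.

```python
from statistics import mean, quantiles

def summarise_throughput(samples_mbps):
    """Return (mean, 5th percentile) of a list of throughput samples in Mbps."""
    # quantiles(..., n=20) returns 19 cut points; the first is the 5th percentile.
    p5 = quantiles(samples_mbps, n=20)[0]
    return mean(samples_mbps), p5

# Hypothetical day of hourly speed tests on a 100 Mbps plan:
# 20 off-peak hours near plan speed, 4 congested evening hours.
samples = [95.0] * 20 + [20.0] * 4

avg, p5 = summarise_throughput(samples)
print(f"mean: {avg:.1f} Mbps, 5th percentile: {p5:.1f} Mbps")
```

With these made-up samples the mean stays above 80Mbps while the 5th percentile sits at the congested-hour speed, which is the experience a household actually has in the evening.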

Many voices in the industry are pushing for more holistic Quality of Experience (QoE) metrics to enlarge the current set of Quality of Service (QoS) measurements.

The difference between QoE and QoS is that the latter method is comparable to measuring the success of a call centre by how many calls are concluded in a given day. This metric completely ignores whether a caller’s problem was sufficiently resolved or how satisfied the caller felt about the interaction, the ‘experience’.

Research shows that users are happy when a website loads in under two seconds (QoE). If network management is calibrated with this information, bandwidth saved can be allocated elsewhere if necessary (QoS).

Thus, one characteristic of QoE is the realisation that there are many examples where a better QoS (above a threshold) does not readily impact the user’s perception/experience of the service.
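A small sketch of this threshold effect, assuming the two-second page-load figure quoted above is used as the satisfaction cut-off. The load times are hypothetical and the scoring function is an illustration, not a standardised QoE model.

```python
def satisfied_share(load_times_s, threshold_s=2.0):
    """Fraction of page loads that complete under the satisfaction threshold."""
    if not load_times_s:
        raise ValueError("need at least one measurement")
    return sum(t < threshold_s for t in load_times_s) / len(load_times_s)

# Hypothetical page-load times in seconds.
baseline = [0.8, 1.2, 1.9, 2.6, 3.1]
# Better QoS spent on loads that were already under two seconds.
faster_fast_pages = [0.4, 0.6, 1.0, 2.6, 3.1]
# Better QoS spent on the loads that were over two seconds.
faster_slow_pages = [0.8, 1.2, 1.9, 1.8, 1.9]

for label, times in [("baseline", baseline),
                     ("faster fast pages", faster_fast_pages),
                     ("faster slow pages", faster_slow_pages)]:
    print(f"{label}: {satisfied_share(times):.0%} satisfied")
```

Under this simple model, speeding up loads that were already below the threshold leaves the score unchanged, while the same effort spent on the slow loads moves it.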

This has some important ramifications in terms of design. For example, services such as online gaming rely on low latency far more than video streaming, where buffering protocols absorb lag. QoE can be used to design SLAs and network management that are specific to the needs of an individual service.

If network providers can develop methods of gathering QoE data, that data can be used to build Autonomic Network Management (ANM) capabilities, which use artificial intelligence to tune network performance in real time in response to user experience.

Low latency and packet loss

Bandwidth has generally been king in the history of communication networks. Low latency has generally lagged behind (pun intended) as a priority in the upgrading of networks.

What is latency?

From a QoE perspective, latency can be roughly defined as ‘the delay between a user’s action and the response of a web application’ – in QoS terms, this is the time taken for a data packet to make a round trip to and from a server (round trip delay).

Latency is affected by many variables, but the main four are:

- Transmission medium: The physical path between the start and end points, e.g. a copper-based network is much slower than fibre-optic.

- Network management: The efficiency of routers and other devices or software that manage incoming traffic.

- Propagation: The further apart two nodes are in the network, the higher the latency. It is estimated that every 100 miles of fibre-optic cable adds about 1ms of latency.

- Storage delays: Accessing stored data will generally increase latency.
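The propagation estimate can be sanity-checked with a short calculation. This is a rough sketch that assumes a refractive index of about 1.47 for optical fibre (a typical textbook value, not a figure from this article) and ignores routing, queuing and equipment delays.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in a vacuum
FIBRE_REFRACTIVE_INDEX = 1.47       # assumed typical value for optical fibre
KM_PER_MILE = 1.609344

def one_way_propagation_ms(distance_km):
    """One-way propagation delay over fibre, in milliseconds."""
    speed_in_fibre_km_s = SPEED_OF_LIGHT_KM_S / FIBRE_REFRACTIVE_INDEX
    return distance_km / speed_in_fibre_km_s * 1000

one_way = one_way_propagation_ms(100 * KM_PER_MILE)
print(f"100 miles of fibre: {one_way:.2f} ms one way, {2 * one_way:.2f} ms round trip")
```

Under these assumptions 100 miles comes out at a little under 1ms one way, which is consistent with the rule of thumb quoted in the list.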

Jitter

There are two types of latency issue.

One is the ‘lag’ (delay) we defined above, and the other is ‘jitter’, the variations in latency that can make connections unreliable. Jitter is usually caused by network traffic jams, bad packet queuing and setup errors.
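The difference between the two can be shown with a few numbers. The sketch below uses one simple measure of jitter, the mean absolute difference between consecutive round-trip times; other definitions exist (RTP, for instance, uses a smoothed estimator). The sample values are hypothetical.

```python
from statistics import mean

def mean_latency_and_jitter(rtts_ms):
    """Return (mean RTT, mean absolute change between consecutive RTTs)."""
    if len(rtts_ms) < 2:
        raise ValueError("need at least two samples to measure jitter")
    deltas = [abs(b - a) for a, b in zip(rtts_ms, rtts_ms[1:])]
    return mean(rtts_ms), mean(deltas)

steady = [30, 31, 30, 29, 30]   # hypothetical stable connection
bursty = [10, 50, 12, 48, 30]   # hypothetical congested connection

print("steady:", mean_latency_and_jitter(steady))
print("bursty:", mean_latency_and_jitter(bursty))
```

Both sample sets have the same average delay, but the second varies far more from packet to packet, which is what makes a connection feel unreliable.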

Packet loss

Packet loss also affects the QoE ‘perception’ of latency. Packet loss occurs when packets of data do not reach their intended destination. It is commonly caused by congestion and hardware issues, and can be more frequent over WiFi, where environmental interference and weak signal play a part. The effect of packet loss is worse for real-time services such as video, voice and gaming. It is also worse for traffic that does not run over TCP, which detects dropped packets and re-sends them.
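As a simple illustration, packet loss can be estimated by numbering packets at the sender and counting which numbers never arrive. This is a sketch with hypothetical data; real measurement tools also have to handle reordering windows and timeouts.

```python
def packet_loss(received_seq, sent_count):
    """Return (loss rate, sorted list of missing sequence numbers).

    Assumes the sender numbered its packets 0 .. sent_count - 1.
    Duplicates in received_seq are ignored.
    """
    if sent_count <= 0:
        raise ValueError("sent_count must be positive")
    missing = sorted(set(range(sent_count)) - set(received_seq))
    return len(missing) / sent_count, missing

# Hypothetical capture: 10 packets sent, two never arrived, one arrived twice.
rate, missing = packet_loss([0, 1, 2, 4, 5, 5, 7, 8, 9], sent_count=10)
print(f"loss: {rate:.0%}, missing packets: {missing}")
```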

Why is low latency so important now?

“All areas of business and private life rely more heavily today than ever before on digital applications. The latency-sensitivity of these applications is not only a hallmark of quality and guarantee of commercial productivity, but also – in critical use cases, a lifeline”

—Ivo Ivanov, CEO of DE-CIX International

Recent technological innovations all tend to require lower latency. Cloud applications, mobile gaming, virtual/augmented reality, and the smart home rely on real-time monitoring and fast signal-to-action responsiveness. The growth of IoT and a world of interconnected sensors dictate that networks have a consistently low latency that is shorter than human reaction times.

- Human beings: 250 milliseconds responding to a visual stimulus

- 4G latency: 200 milliseconds

- 5G latency: 1 millisecond

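Consider the safety implications when your car can react 250 times faster than you. At 100km/h, a human reaction time of 250 milliseconds means the car travels about 7m before the driver responds (the often-quoted reaction distance of 30m corresponds to a reaction time of around one second). With a 1 millisecond (1ms) reaction time, your autonomous car can begin to brake after a reaction distance of about 3cm.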
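The reaction-distance arithmetic is simply speed multiplied by reaction time, and can be checked with a few lines. The function name below is illustrative; the inputs are the speed and reaction times given above.

```python
def reaction_distance_m(speed_kmh, reaction_time_ms):
    """Distance travelled (in metres) before any reaction begins."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * reaction_time_ms / 1000

human = reaction_distance_m(100, 250)    # 250 ms human reaction time
machine = reaction_distance_m(100, 1)    # 1 ms network latency

print(f"human:   {human:.2f} m")
print(f"machine: {machine * 100:.1f} cm")
```

At 100km/h this gives just under 7m for a 250ms reaction and just under 3cm for 1ms. A reaction distance of 30m at the same speed corresponds to a reaction time of a little over one second. Note that this covers only the distance travelled before braking starts, not the braking distance itself.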

The relationship of latency and user experience to geography

The maximum affordable latency for a decent end-user experience with today’s general-use applications is around 65 milliseconds. However, a latency of no more than 20 milliseconds is necessary to perform all these daily activities with the level of performance that everybody deserves. Translating this into distance, this means the content and the applications need to be as close to the users as possible. Geographically speaking, applications like interactive online gaming and live streaming in HD/4K need to be less than 1,200 km from the user. But the applications that our digital future will be based on will demand much lower latency – in the range of 1-3 milliseconds. Smart IoT applications, and critical applications requiring real-time responses, like autonomous driving, need to be performed within a range of 50-80 km from the user.
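To see how a latency budget translates into distance, here is a rough sketch. It assumes the latency figures are round-trip times, that light travels through fibre at roughly 200km per millisecond, and that a fixed overhead is lost to routing, queuing and processing. The overhead parameter and its example value are hypothetical, chosen only for illustration.

```python
FIBRE_KM_PER_MS = 200.0   # assumed: light in fibre covers roughly 200 km per ms

def max_distance_km(rtt_budget_ms, overhead_ms=0.0):
    """Upper bound on user-to-server distance for a round-trip latency budget.

    overhead_ms is the (hypothetical) share of the budget consumed by
    routing, queuing and server processing rather than propagation.
    """
    usable_ms = rtt_budget_ms - overhead_ms
    if usable_ms <= 0:
        return 0.0
    return usable_ms / 2 * FIBRE_KM_PER_MS   # halve it: out and back

print(max_distance_km(20))                  # propagation only
print(max_distance_km(20, overhead_ms=8))   # with an assumed 8 ms of overhead
print(max_distance_km(1))                   # 1 ms budget, propagation only
```

Pure propagation gives an upper bound only. The practical distances quoted above are shorter because real paths are not straight lines and every device along the way adds delay.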

How networks can reduce latency

There are a variety of ways of lowering latency. Businesses can pay for dedicated private networks and links that deliver extremely reliable and stable connections. This is also one of the few solutions that tackles performance gaps in the ‘middle mile’ of the internet: the network infrastructure that connects last-mile (local) networks to high-speed network service providers.

Any service which uses the backbone of the internet will run into problems of inefficient routing due to:

- Border Gateway Protocol (BGP) for routing (because it has no congestion avoidance)

- Least-cost routing policies

- Transmission Control Protocol (TCP): It is a blunt-tool protocol that reacts strongly to congestion and throttles throughput
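The way TCP throttles throughput can be illustrated with the additive-increase, multiplicative-decrease (AIMD) rule at the heart of its classic congestion avoidance. This is a deliberately simplified sketch: it omits slow start, timeouts and the refinements of modern TCP variants, and the parameter values are illustrative.

```python
def aimd(rounds, loss_rounds, cwnd=1.0, increase=1.0, decrease=0.5):
    """Simulate a congestion window over a number of round trips.

    Each round without loss grows the window by `increase` segments;
    each round in `loss_rounds` multiplies it by `decrease`.
    """
    history = []
    for r in range(rounds):
        if r in loss_rounds:
            cwnd = max(1.0, cwnd * decrease)   # back off sharply on loss
        else:
            cwnd += increase                   # probe gently for more bandwidth
        history.append(cwnd)
    return history

# Hypothetical connection: a single packet loss in round 5.
print(aimd(rounds=8, loss_rounds={5}))
```

A single loss halves the sending window, and it then takes several round trips to recover. On a long, high-latency path each of those round trips is slow, which is why loss and latency together hit throughput so hard.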

SD-WAN, latency and efficient network management

One other solution is offered by the latest breed of SD-WAN software. SD-WAN operates as a virtual overlay of the internet, testing and identifying the best routes via a feedback loop of metrics. Potentially SD-WAN can limit packet loss and decrease latency by sending data through pre-approved optimal routes. MPLS does something similar, labelling traffic to ensure it is dealt with on a priority basis; but this service is more expensive than SD-WAN and its architecture is not suited to cloud connectivity.
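The idea of choosing a route from a feedback loop of metrics can be sketched as a simple scoring function. This is a toy illustration, not the algorithm of any SD-WAN product: the path names, measurements and weights are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class PathMetrics:
    name: str
    latency_ms: float
    jitter_ms: float
    loss_pct: float

def score(path, w_latency=1.0, w_jitter=2.0, w_loss=50.0):
    """Lower is better. The weights are illustrative assumptions."""
    return (w_latency * path.latency_ms
            + w_jitter * path.jitter_ms
            + w_loss * path.loss_pct)

def best_path(paths):
    """Pick the path with the lowest weighted score."""
    return min(paths, key=score)

# Hypothetical measurements from the most recent probe cycle.
paths = [
    PathMetrics("mpls", latency_ms=25.0, jitter_ms=1.0, loss_pct=0.0),
    PathMetrics("broadband", latency_ms=18.0, jitter_ms=6.0, loss_pct=0.5),
    PathMetrics("lte", latency_ms=45.0, jitter_ms=12.0, loss_pct=1.0),
]

print(best_path(paths).name)
```

In this example the broadband link has the lowest raw latency, but its jitter and loss push the choice to the MPLS link. Re-running the selection as new measurements arrive is what makes it a feedback loop.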

SD-WAN is a hybrid solution, meaning that the software overlay can route traffic over a host of networks, including MPLS, a dedicated line and the internet. WAN management also includes a host of virtualised network tools that optimise network efficiency. These include removing redundant data (known as deduplication), compression, and caching (where frequently accessed data is stored closer to the end user).
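Deduplication and compression can be illustrated in a few lines using Python's standard library. This is a minimal sketch of the general idea, with hypothetical data; it does not represent how any particular WAN optimisation product is implemented.

```python
import hashlib
import zlib

def deduplicate(chunks):
    """Store each distinct chunk once; return (store, ordered references)."""
    store = {}
    refs = []
    for chunk in chunks:
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)   # keep only the first copy
        refs.append(digest)               # remember where each chunk belongs
    return store, refs

def rebuild(store, refs):
    """Reassemble the original byte stream from the store and references."""
    return b"".join(store[d] for d in refs)

# Hypothetical traffic in which the first and last chunks are identical.
chunks = [b"header" * 100, b"payload-a" * 100, b"header" * 100]

store, refs = deduplicate(chunks)
compressed = {d: zlib.compress(c) for d, c in store.items()}

raw_bytes = sum(len(c) for c in chunks)
sent_bytes = sum(len(c) for c in compressed.values())
print(f"{raw_bytes} bytes reduced to {sent_bytes} bytes of chunk data")
```

The repeated chunk is sent once and referenced twice, and each stored chunk is compressed before transmission. The receiving side can rebuild the original stream from the references.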

To find out more about the range of network infrastructure and SD-WAN services offered by Cambridge Management Consulting, visit our website.

5G promises ultra-low latency

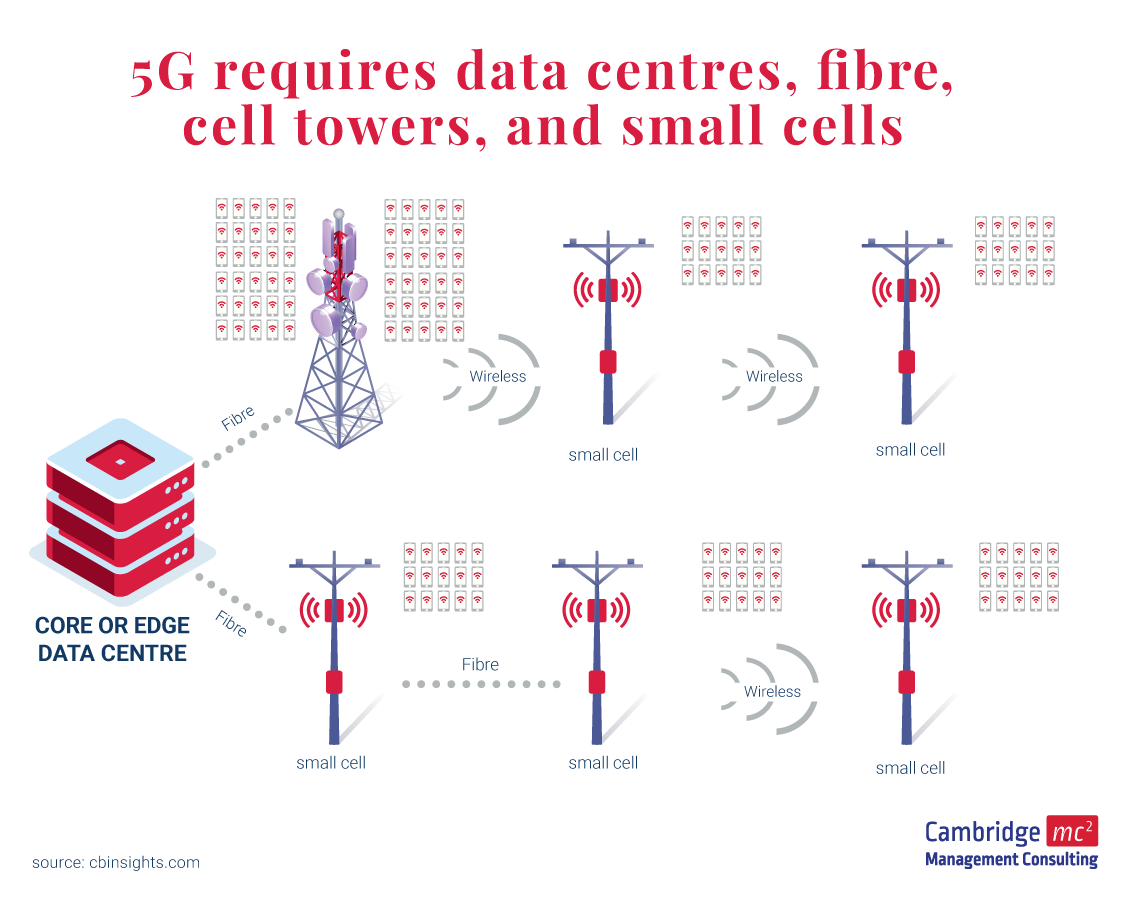

5G promises to lead us into a world of ultra-low latency, paving the way for robotics, IoT, autonomous cars, VR and cloud gaming. For this to become a reality, new infrastructure must be installed; this requires significant investment from governments and telecoms companies. Most countries need to install much more fibre to deal with the backhaul of data.

During the transition, the current 4G network will need to support 5G and there will be a combination of new and old tech, patches and upgrades to masts. Edge computing will eventually move data-centres closer to users, also contributing to lower latency. It could be many years before we see the kinds of low-latency connections that have been promised.

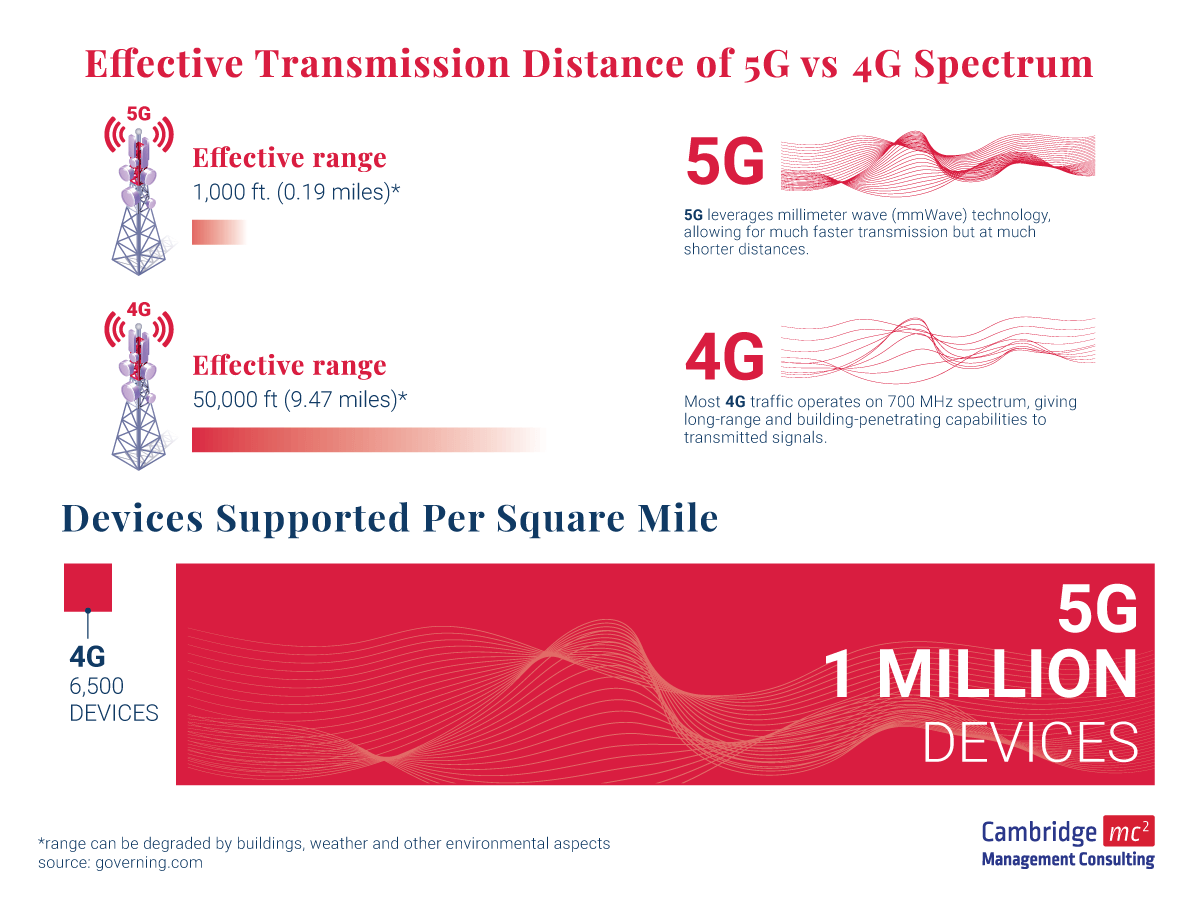

How 5G and 'network slicing' will end high latency

With the fifth generation of cellular data, gigabit bandwidth should become the norm, and the frame length (the time waiting to put bits into the channel) will be drastically reduced. 5G moves up the electromagnetic spectrum to make use of millimeter waves (mmWave), which have much greater capacity but poorer propagation characteristics. These millimeter waves can be easily blocked by a wall, or even a person or a tree. Therefore, operators will use a combination of low, mid, and high range spectrum to support different use cases.

The mid- to long-term solution to propagation restrictions is that 5G will require a network of small cells as well as the cell towers to support them (NG-RAN architecture). Small cells can be located on lampposts, sides of buildings, and also within businesses and public buildings. They will enable the ‘densification’ of networks, broadcasting high capacity millimeter waves primarily in urban areas. Because optical fibre may not be available at all sites, wireless backhaul will be a common option for small cells.

Edge computing will further support this near-user vision. Using off-the-shelf servers, and smaller data centres closer to the cell towers, edge computing can ensure low latency and high bandwidth.

“As latency requirements get lower and lower, it becomes more and more important to bring interconnection services as close to people and businesses as possible, everywhere. Latency truly is the new currency for the exciting next generation of applications and services”

—Ivo Ivanov, CEO of DE-CIX International

What is network slicing?

The key innovation enabling the full potential of 5G architecture to be realised is network slicing. This technology adds an extra dimension by allowing multiple logical networks to simultaneously overlay a shared physical network infrastructure. This creates end-to-end virtual networks that include both networking and storage functions.

Operators can effectively manage diverse 5G use cases with differing throughput, latency and availability demands by ‘slicing’ network resources and tailoring them to multiple users.
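As a toy illustration of the idea, the sketch below models slices as sets of requirements carved out of one shared capacity pool. Real 5G slicing is specified by 3GPP and involves far more than this; the slice names, figures and the admission rule (strictest latency first) are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Slice:
    name: str
    max_latency_ms: float
    min_throughput_mbps: float

def admit_slices(slices, capacity_mbps):
    """Admit slices onto shared capacity, strictest latency requirement first.

    Returns (names of admitted slices, remaining capacity in Mbps).
    """
    admitted = []
    remaining = capacity_mbps
    for s in sorted(slices, key=lambda s: s.max_latency_ms):
        if s.min_throughput_mbps <= remaining:
            admitted.append(s.name)
            remaining -= s.min_throughput_mbps
    return admitted, remaining

# Hypothetical slices sharing one 1,000 Mbps physical network.
slices = [
    Slice("video-streaming", max_latency_ms=50, min_throughput_mbps=600),
    Slice("autonomous-vehicles", max_latency_ms=1, min_throughput_mbps=50),
    Slice("bulk-backup", max_latency_ms=500, min_throughput_mbps=500),
    Slice("iot-sensors", max_latency_ms=100, min_throughput_mbps=20),
]

admitted, remaining = admit_slices(slices, capacity_mbps=1000)
print(admitted, remaining)
```

Each admitted slice behaves like its own logical network with its own guarantees, even though all of them share the same physical infrastructure.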

What is realistic progress for 5G in 2022?

According to the California-based company Grand View Research, the global 5G infrastructure market size —valued at $1.9bn in 2019— is projected to reach $496.6bn by 2027.

There are, however, significant costs associated with 5G roll-out, as well as complications arising from planning regulations (in the UK alone, a separate planning application has to be filed for each small cell) and the need to alleviate public health fears about the technology.

There is still also the issue of digital equality (conquering the digital divide). There is a risk the divide could widen further if 5G services are concentrated only in cities, as economics will almost certainly dictate.

The EU recently announced their Path to the Digital Decade, a concrete plan to achieve the digital transformation of society and the economy by 2030.

Read more about the Path to the Digital Decade.

“The European vision for a digital future is one where technology empowers people. So today we propose a concrete plan to achieve the digital transformation. For a future where innovation works for businesses and for our societies. We aim to set up a governance framework based on an annual cooperation mechanism to reach targets in the areas of digital skills, digital infrastructures, digitalisation of businesses and public services.”

—Margrethe Vestager, Executive Vice President for ‘A Europe Fit for the Digital Age’

5G has been dubbed by some as the next industrial revolution. If all the technologies that it intends to drive are realised within the next decade, that could certainly be the case. What is achievable in the short-term, however, is less clear and progress could be slowed by infrastructural barriers and rising costs.

As we head into 2022, significant work is needed to upgrade legacy systems so that they integrate with the rollout of 5G, along with an acceleration in laying fibre-optic cables to deal with the backhaul of data from the proliferation of 5G cells.

While 5G leads the technological improvement of the network, lowering latency at the network edge also needs to be a primary goal. Operators must focus on latency as one element (albeit a key element) of a holistic strategy to improve the mobile internet experience, and measure this against a robust QoE framework.

Contributors

Thanks to Ivo Ivanov, CEO of DE-CIX International; Charles Orsel des Sagets, Managing Partner, Cambridge MC; Eric Green, Senior Partner, Cambridge MC; and Tim Passingham, Chairman, Cambridge MC, who all made contributions to this article. Special thanks to Ivo Ivanov, for his quotes.

Thanks to Karl Salter, web designer and graphic designer, for infographics.

You can find out more about Ivo Ivanov on LinkedIn and DE-CIX via their website.

Read bios for Charles Orsel des Sagets, Tim Passingham and Eric Green.

About Us

Cambridge Management Consulting is a specialist consultancy drawing on an extensive network of global talent. We are your growth catalyst, assembling a team of experts to focus on the specific challenges of your market.

With an emphasis on digital transformation, we add value to any business attempting to scale by combining capabilities such as marketing acceleration, digital innovation, talent acquisition and procurement.

Founded in Cambridge, UK, we created a consultancy to cope specifically with the demands of a fast-changing digital world. Since then, we’ve gone international, with offices in Cambridge, London, Paris and Tel Aviv, 100 consultants in 17 countries, and clients all over the world.

Find out more about our SD-WAN and network architecture consultancy services.

Find out more about our digital transformation services and full list of capabilities.
